Next Generation Data Center Security: The Cornerstone of Web3?

0. Next generation data center

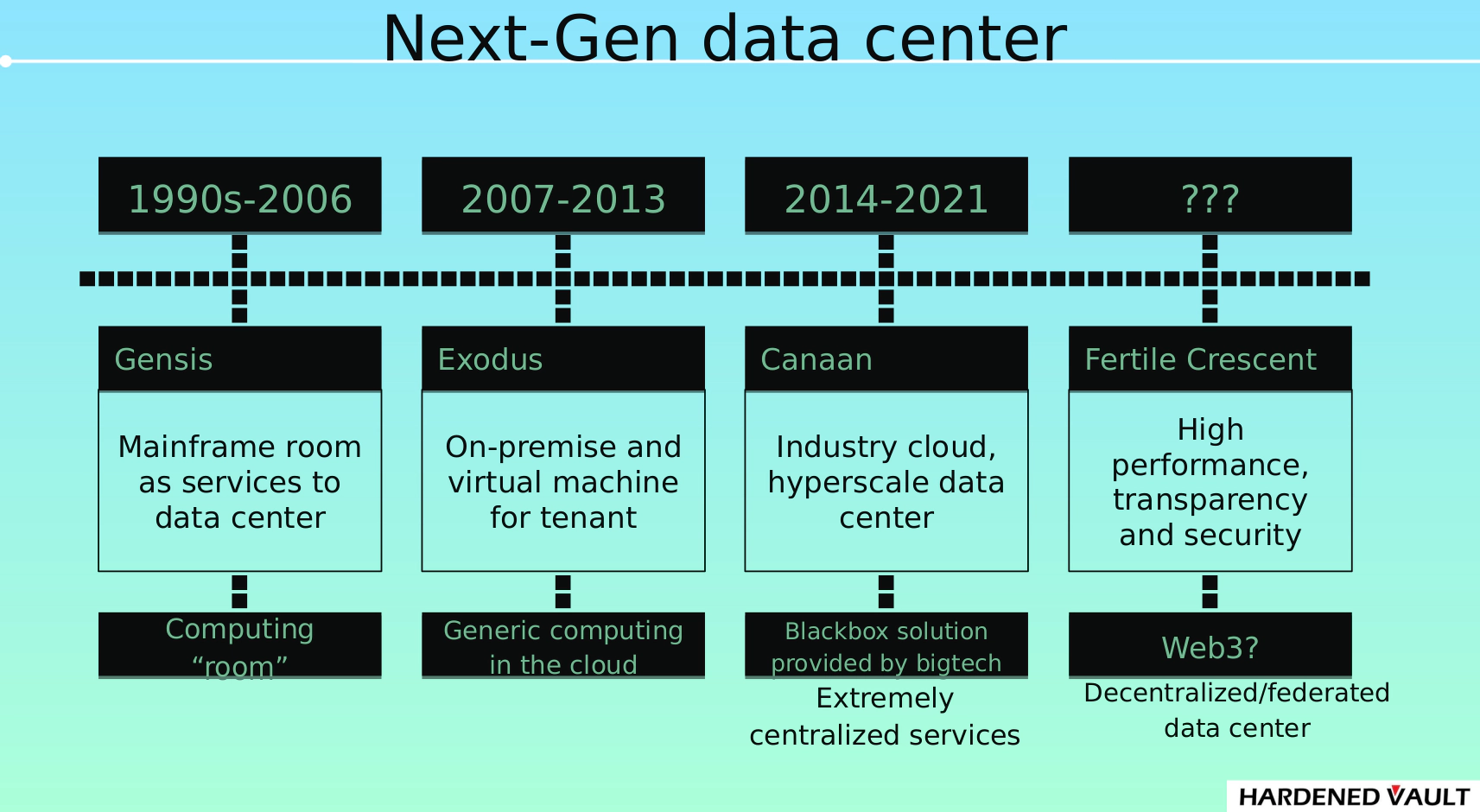

What is the next generation data center? Different perspectives yield different answers. The community has already discussed this question from the perspectives of environmental protection, performance, and decentralized edge computing. This write-up mainly focuses on the security and decentralization attributes of the next-generation data center. Before we dive into the “next generation”, we probably need to go back and figure out what the “previous generations” were, so let’s look at the development history of the data center. From a computing perspective, we simply divide that history into four stages.

- v1.0: The era of the “computing room”, from the 1990s to 2006, which included mainframes, minicomputers, and x86 general-purpose computers.

- v2.0: The generic cloud computing era, from 2007 to 2013. The business model is tenant-oriented: users can rent either bare-metal servers or virtual machines.

- v3.0: The era of the industry cloud, from 2014 to 2021. High value-added industry clouds were born once CSPs (cloud service providers) became mainstream. Hyperscale data centers controlled by CSPs led to centralization.

- v4.0: The era of Web3 (?), from 2022 onward. Decentralization and federation are reshaping how computing and storage are delivered to users.

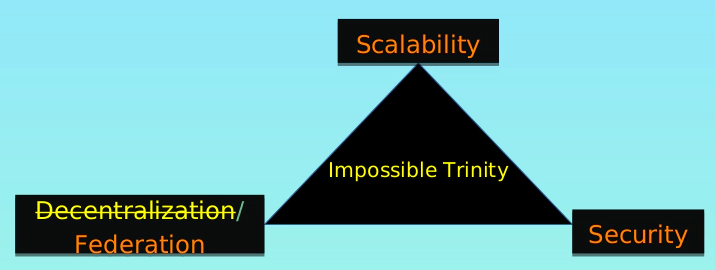

1. Impossible trinity: decentralization vs. federation

The impossible trinity consists of scalability, decentralization, and security.

Unfortunately, any system can meet only two of them. For example, extremely decentralized solutions like BTC (Bitcoin) and XMR (Monero) gave up on scalability from the beginning, which leaves the BTC/XMR technical architecture unable to provide complex services. The presence of layer 2 is therefore inevitable, but layer 2 has several major security problems:

- Security and scalability are also important for decentralized systems. The risk level of a super node can reach that of a typical centralized service (e.g. a web server). (Note: HardenedVault nodes can be immune to classic EOS super node vulnerability attacks.)

- Design flaws and buggy implementations in cross-chain protocols.

- Supply chain risks, e.g. backdoors implanted by a developer or a malicious group; the build infrastructure needs to be hardened properly (SLSA Level 3), etc.

The impossible trinity may become possible if the system is willing to sacrifice some degree of decentralization and adopt a federated architecture. Of course, the next generation data center is an important precondition as well.

2. The future of Internet

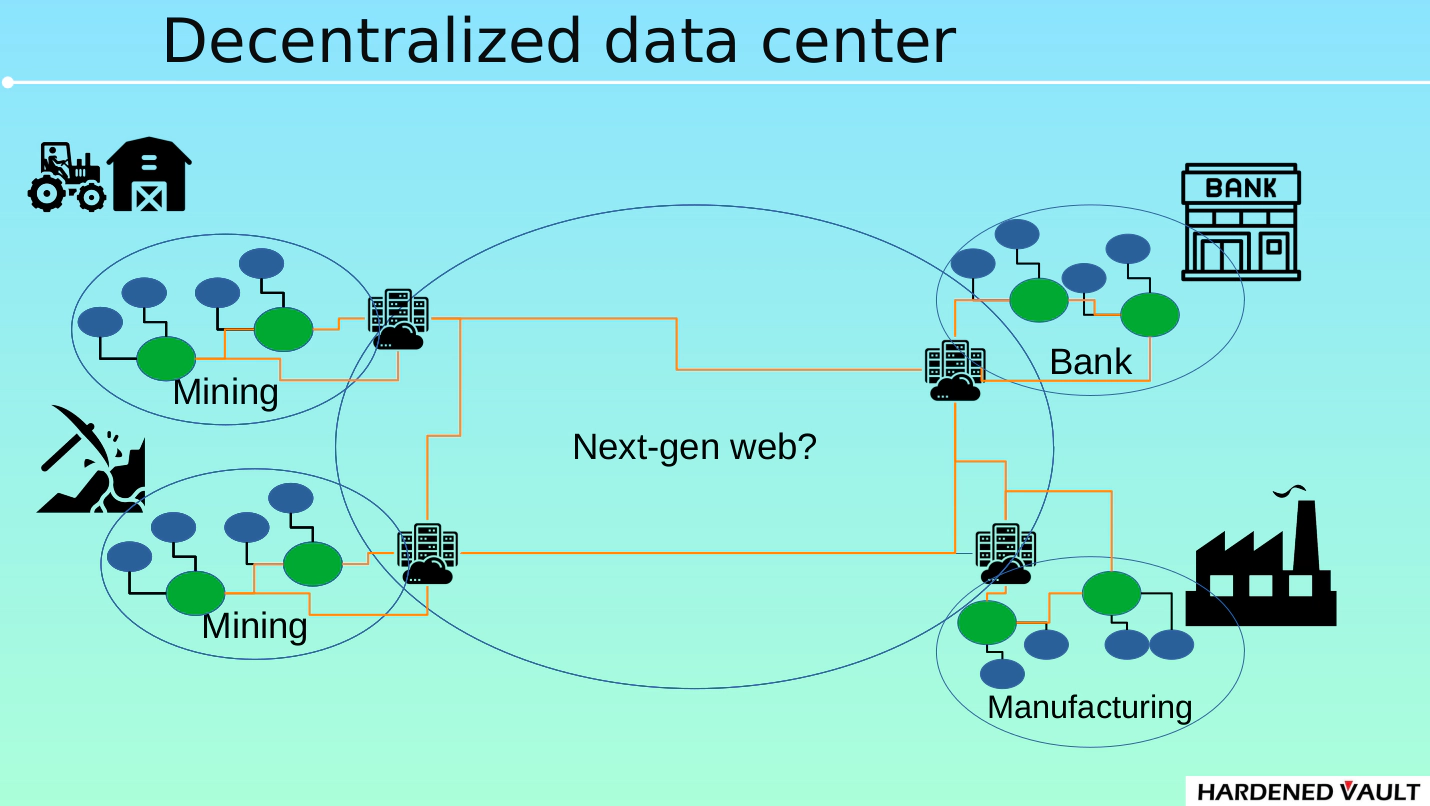

If you ask some random 0ldsk00l hackers or cypherpunks whether they’re happy with the internet today, you’ll get a negative answer. Well, it’s the same answer from Vault Labs. Everyone has different expectations of which form the Internet “should” have, but 0ldsk00l hackers and cypherpunks will never agree to a centralized model. Here we try to depict an ideal scenario:

- The figure shows four towns: A, an agricultural town; B, a financial town; C, a mining town; and D, a factory town.

- Communication among the 4 small towns uses a federated architecture rather than a 100% decentralized one. The blue circles represent application nodes and the green ones represent federated servers. Each application node can communicate with other application nodes, and an application node can be physically located in any data center in these towns. Federation is a trade-off between 100% decentralization and scalability/efficiency. This is probably the major form of the next generation of the Internet (whether it is called Web3 or not). On the other hand, some applications, such as Bitcoin or Monero, should remain extremely decentralized. In the context of distributed ledger technology (blockchain), federated servers are similar to super nodes, which should disclose their security profiles. For example, our previous project Security Chain requires every super node that intends to provide services to disclose its security profile on the ledger.

- These towns use fourth-generation data centers. Each single-node server has high performance (for example, servers equipped with AMD EPYC3 can provide more than 4000 processor cores per cabinet), which decreases the number of racks needed in the data center. A federated architecture also benefits from the high performance of every single node.

- Server firmware must be open source. Although open source cannot guarantee security directly, its auditability reduces backdoor risk.

- If users deploy servers in their own basement, physical security is largely under their control, or at least the risk of Evil Maid attacks is reduced. If users instead want to deploy their servers in a data center in one of these towns, hardware-level security features can help: users can check whether their machines are running in an “expected” state by triggering remote attestation.
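The “expected state” check behind remote attestation can be sketched as follows. This is a minimal illustration, not HardenedVault’s implementation: it only models the TPM-style PCR extend operation (new PCR = SHA-256 of the old PCR concatenated with the measurement digest) and a verifier that replays known-good boot components. The function names and the component list are hypothetical, and a real attestation flow additionally relies on a TPM-signed quote with a fresh nonce, which is omitted here.

```python
import hashlib
import hmac

PCR_SIZE = 32  # size of a PCR in the SHA-256 bank


def extend_pcr(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new_pcr = SHA256(old_pcr || SHA256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def replay_boot_chain(components) -> bytes:
    """Fold every boot component, in order, into a PCR that starts at all zeros."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend_pcr(pcr, component)
    return pcr


def is_expected_state(reported_pcr: bytes, golden_components) -> bool:
    """Verifier side: compare the reported PCR with the replayed known-good value."""
    expected = replay_boot_chain(golden_components)
    return hmac.compare_digest(reported_pcr, expected)
```

A verifier holding the known-good images (for instance firmware, bootloader, and kernel) would call `is_expected_state(reported_pcr, golden_components)`. Because each extend hashes over the previous value, changing or reordering any component produces a different final PCR.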

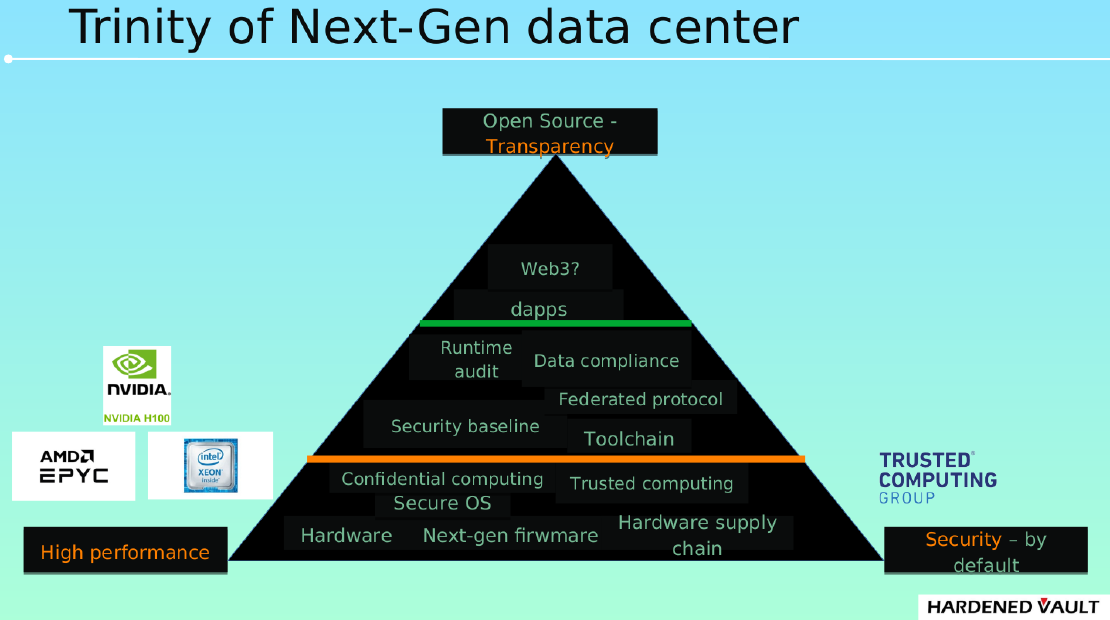

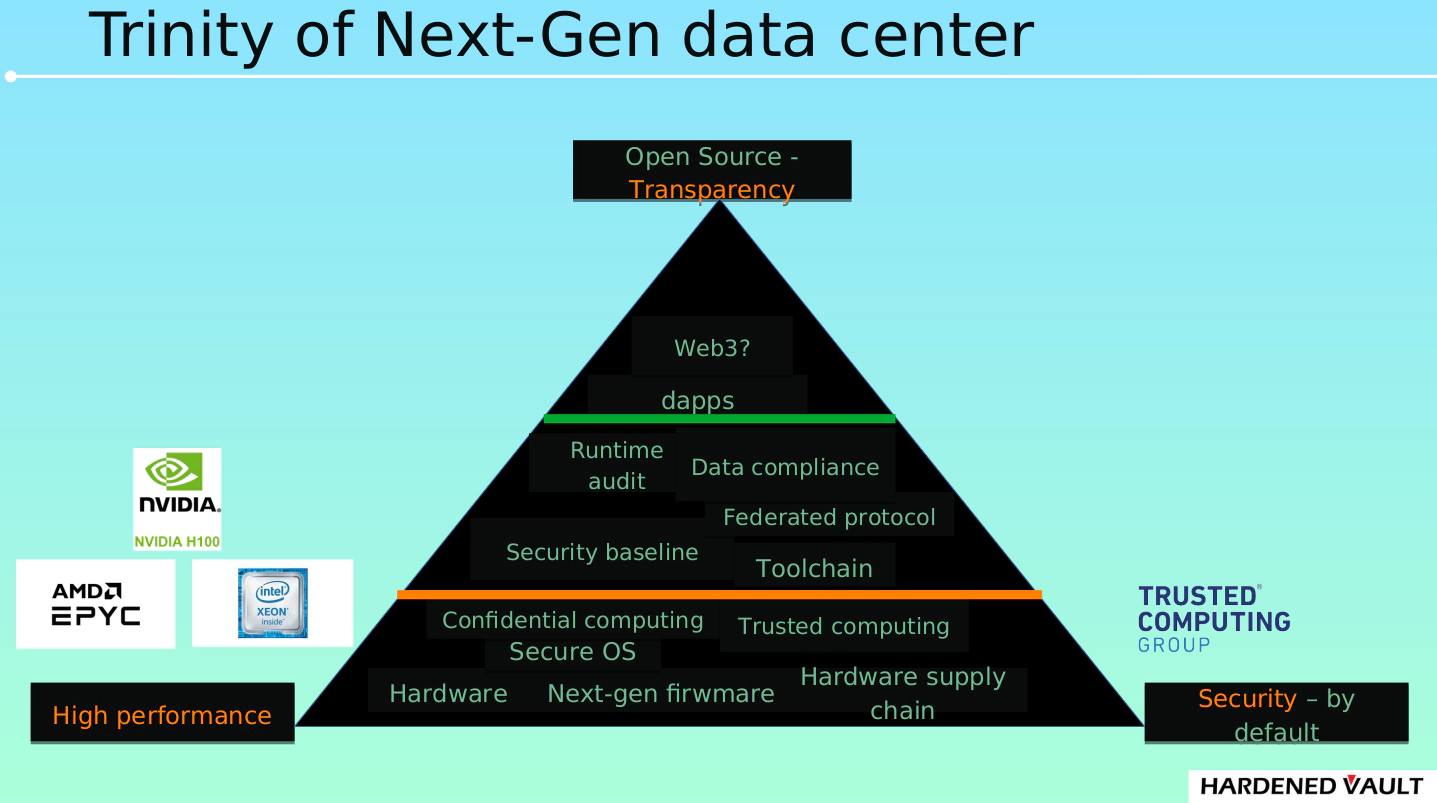

3. The trinity of next generation data center

The core of the data center is computing and storage. One node of the next generation data center (computing server or storage unit) must have high performance, high security and high transparency (open source).

This article will focus on transparency and security. In terms of performance, there are plenty of published performance benchmarks for AMD EPYC3 and AWS Graviton.

3.1 Transparency

Here we look at transparency across four categories (hardware, firmware, operating system, application), that is, the gap between what is available as an open source implementation and what actually runs in production.

In terms of hardware architecture, the current mainstream options are x86 and arm64. The latest x86 is represented by Intel (10nm) and AMD (TSMC 5nm), and arm64 is represented by AWS Graviton 3, but all of these are designed and manufactured in a closed-source manner. Unfortunately, the fully open source design and manufacturing process is LibreSilicon at 500 nm (decades behind the industry). The good news is that OpenROAD, a great open source toolchain project, is making progress, but it still lacks physical library support. It will not be possible to reach the level of the latest process (5nm) in the near future. Although many people have high expectations of RISC-V, the reality is that the openness of RISC-V exists only at the ISA (instruction set architecture) level. Please note that the openness of CPU IP and other peripheral IP is pretty much the same as for x86/arm64. In short, transparency in hardware is very low.

Firmware is special software that sits closest to the hardware. For a long time, mainstream firmware has been dominated by BIOS/UEFI. Its ecosystem problems, which cannot be solved, pose a serious threat to security. The next-generation data center adopts a more succinct firmware architecture: coreboot. Currently, big tech companies like Google/Meta/ByteDance promote coreboot as a standard component for server firmware. HardenedVault has also launched vaultboot, a firmware security solution that supports both coreboot and UEFI. Vaultboot takes care of security provisioning, including hardware-level features like trusted computing (TPMv2.0/TXT/CBnT) and confidential computing (SGX/TDX/SEV). Firmware transparency is much higher than hardware transparency, but there are still many components without an open source implementation, such as Intel FSP, microcode, and Intel CSME/AMD PSP.

The transparency of operating systems and application software is already good enough.

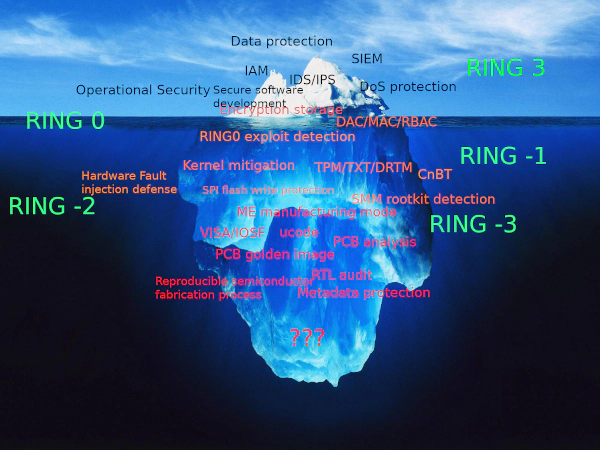

3.2 High security

Advanced security protection is one of the core features of next generation data center nodes, because security boundaries no longer exist in a federated or decentralized network. “Plug-in” security solutions (such as antivirus software, HIDS, EDR, etc.) are not able to meet today’s challenges. It’s time to look back to the 0ldsk00l “Build Security In” philosophy, that is, to strengthen the system’s self-protection, which works a bit like a “vaccine”. It has a fancy name today: resiliency. Strong self-protection capabilities within the system may save security engineers from the cycle of writing rules/tests for various security solutions. Vaccine-like solutions are what the next-gen data center needs:

- VED (Vault Exploit Defense) or other vaccine-like Linux kernel threat mitigations against container escape, privilege escalation, and rootkits.

- Small tweaks in JVM extensions, combined with cryptography engineering, can mitigate Java deserialization vulnerabilities like Log4Shell.
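To make the “cryptography engineering” idea in the last bullet concrete, here is a generic sketch of one well-known pattern: authenticate a serialized payload with an HMAC and refuse to deserialize anything whose tag does not verify. It is written in Python with `pickle` standing in for an unsafe deserializer; it is not the JVM extension mentioned above, and the names `seal` and `open_sealed` are hypothetical. The pattern assumes the key is shared only between trusted parties.

```python
import hashlib
import hmac
import pickle

TAG_LEN = 32  # length of an HMAC-SHA256 tag in bytes


def seal(key: bytes, obj) -> bytes:
    """Serialize obj and prepend an HMAC-SHA256 tag computed over the payload."""
    payload = pickle.dumps(obj)
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return tag + payload


def open_sealed(key: bytes, blob: bytes):
    """Verify the tag first; only deserialize payloads that authenticate."""
    if len(blob) < TAG_LEN:
        raise ValueError("blob too short to contain a tag")
    tag, payload = blob[:TAG_LEN], blob[TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed; refusing to deserialize")
    # The unsafe deserializer runs only on authenticated data.
    return pickle.loads(payload)
```

The design choice is that the dangerous operation is never reached for attacker-controlled bytes: a payload modified in transit, or produced without the key, is rejected before deserialization starts.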

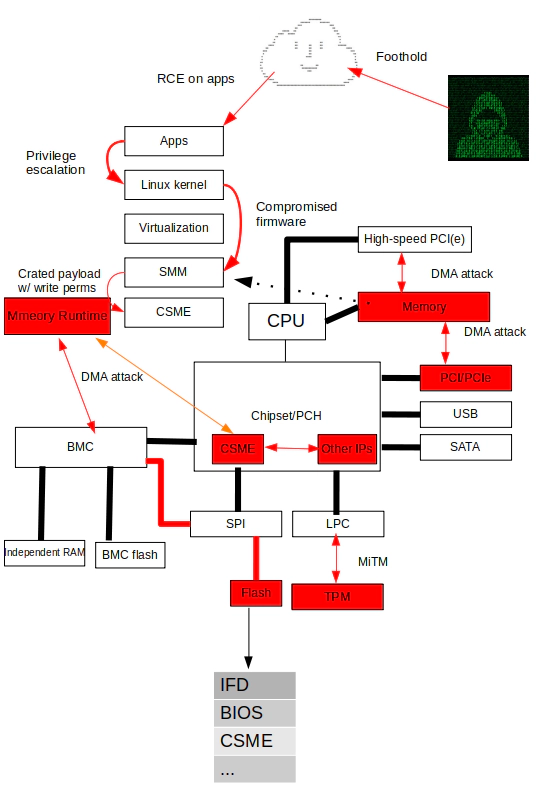

Let’s take a look at two critical threat models. The first one can be launched remotely:

Attack path:

- Ring 3 exploits (a webshell or remote exploitation targeting applications) gain normal execution privileges.

- Exploiting a Ring 0 kernel vulnerability for container escape and privilege escalation. Please note that although the operating system kernel is no longer the “CORE” in the sense of “Attacking the CORE” back in 2007, it is still very important because the kernel is the entry point to the lower levels.

- Judging from the current situation (threat intelligence and attack examples), there is no need to pay too much attention to the Ring -1 virtualization level.

- By bypassing the chipset protection mechanisms or through physical attacks, the attacker compromises Ring -2 firmware, such as SMM, to gain the ability to write to SPI flash.

- By exploiting the early startup phase of Ring -3 (CSME), i.e. components such as RBE and the kernel that cannot be disabled in CSME v11 and later, or a 0day or known vulnerability in a CSME code module (such as SA-00086), the attacker gains full control of the CSME. The attacker can then use the CSME as a springboard to enable VISA and access the internal interfaces of the PCH.
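The remote attack path above descends one privilege level at a time, so it can be modeled as an ordered chain in which each layer’s self-protection either stops the attacker or lets them continue downward. The sketch below is purely illustrative: the `Layer` type, the `ATTACK_PATH` list, and `deepest_compromise` are hypothetical names, and Ring -1 is left out because the text treats it as low priority.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Layer:
    ring: int
    name: str


# Order follows the remote attack path described in the text.
ATTACK_PATH = [
    Layer(3, "application"),
    Layer(0, "kernel"),
    Layer(-2, "firmware (SMM)"),
    Layer(-3, "CSME"),
]


def deepest_compromise(hardened_rings: Iterable[int]) -> Optional[Layer]:
    """Return the deepest layer reached before hitting a hardened ring."""
    hardened = set(hardened_rings)
    reached = None
    for layer in ATTACK_PATH:
        if layer.ring in hardened:
            break  # self-protection at this layer stops the chain
        reached = layer
    return reached
```

With no hardened layer the chain ends at the CSME, while `deepest_compromise({0})` stops at the application layer. This simple model assumes the attacker has no route around a hardened layer, which is an idealization.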

The second one is the Evil Maid attack, that is, the attacker is able to physically approach your machine and launch physical attacks:

- DMA attacks using a USB3380 or an FPGA based on the Thunderclap template. For starters, try PCILeech.

- DIY cheap hardware to launch a MITM (man-in-the-middle) attack on the TPM (Trusted Platform Module).

- Emulated keyboard via USB HID.

- Overwriting specific bytes/sections of the firmware with a cheap SPI programmer.

3.2.1 Breaking the habit: Vault Paradox

It is still very difficult for an organization to figure out the actual risk of firmware/hardware because of a loop we call the “Vault Paradox”. The complexity of system security usually leads to a wrong threat model, even in an organization with comparatively “unlimited” resources (megacorp/bigtech, state actors, etc.). Let’s see how this “Vault Paradox” might happen to your organization:

- Assume that an attacker’s goal is to gain kernel privileges and implant a kernel rootkit. The attacker achieves this goal after breaking through the application-layer and kernel-layer protections and does not attack the next layer. Even if forensics can prove that the attacker left footprints only in the kernel, this does not prove that the attacker is unable to compromise the lower levels, e.g. virtualization, SMM, and CSME. A typical decision maker can be blinded by this kind of forensics report and settle into numbness (I don’t care) and ignorance (I don’t know).

- Assume that an attacker has compromised the UEFI/SMM firmware and achieved persistence. If the attacker does not conduct large-scale operations and simply strikes high-value targets, such attacks are difficult to detect, and the forensics cost much more than at the kernel level.

- Assume that an attacker has compromised most of the IPs in the PCH, including the CSME, and achieved persistence. Most detection and forensics will fail at this stage, so the system is often considered secure by the organization’s decision makers.

Even if users have full-spectrum threat intelligence resources, it is still difficult to conclude whether they should deal with the two critical threat models above, because a threat model can’t be built on an “incomplete” puzzle. In fact, node security in the next generation data center must achieve maximum immunity to those threat models by strengthening self-protection capability (resiliency); otherwise nobody would use it for the ideal use case described in the 4-town story.

Missing pieces in Cypherpunks: Machine privacy

Protecting the machine’s privacy is a simple idea. God created man in his own image (Genesis 1:27), and we created machines by reverse engineering nature (scientific research). If we need privacy protection, how about our creations? Real-life examples demonstrate that high-frequency communication between machines is prevalent in both internet applications and Industry 4.0. Think about the 4-town model: should we protect the privacy of machines/nodes if billions of nodes are running in decentralized/federated networks? vault1317 is the prototype for that use case. Perhaps we’re way too early for the party ;-)

Wrap-up: The desert of the real

The future of the internet, the ultimate form of Web3, and the next-gen data center are exciting topics. The challenges involve almost all major areas of infrastructure and platform security, including hardware, firmware, operating systems, secure communication protocols, and so on. Hackers and the cypherpunk community are once again on an expedition. The future is full of possibilities and uncertainty, but it will ultimately emerge from each individual’s choices. Speaking of individuals, hackers believe that the individual is the key to the future. The strength of a person’s spirit would then be measured by how much ‘truth’ he could tolerate (Friedrich Nietzsche), and this goes back to the choice between the red and blue pills: the desert of the real or the glory of the illusion. Let’s wrap it up with the desert of the real. May all 0ldsk00l hackers and cypherpunks stick with their sacred vision in the secular world!